最近基于netty在做一个项目,在做压测时出现了io.netty.util.internal.OutOfDirectMemoryError,出现大量异常信息:

2018-06-22 14:24:37.875 ERROR 5381 --- [-] [ntLoopGroup-7-3] c.e.c.s.c.s.h.h.HttpProxyServerHandler : exception happens, clientId: 1aa0892c

io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 16777216 byte(s) of direct memory (used: 251658247, max: 268435456)

at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:615)

at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:569)

at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:764)

at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:740)

at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:244)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:214)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:146)

at io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:324)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:181)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:172)

at io.netty.buffer.AbstractByteBufAllocator.ioBuffer(AbstractByteBufAllocator.java:133)

at io.netty.channel.DefaultMaxMessagesRecvByteBufAllocator$MaxMessageHandle.allocate(DefaultMaxMessagesRecvByteBufAllocator.java:80)

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:122)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

at java.lang.Thread.run(Thread.java:745)

看异常信息可以看到堆外内存共256M,已经使用240M,加上继续分配16M已经达到了最大值。

通过上诉异常栈,进行一些简单的分析:

分析1:查看异常对应的代码(PlatformDependent.java)可以发现,堆内存的最大限制是读取的JVM参数-XX:MaxDirectMemorySize,然后赋值给常量DIRECT_MEMORY_LIMIT

private static void incrementMemoryCounter(int capacity) {

if (DIRECT_MEMORY_COUNTER != null) {

for (;;) {

long usedMemory = DIRECT_MEMORY_COUNTER.get();

long newUsedMemory = usedMemory + capacity;

if (newUsedMemory > DIRECT_MEMORY_LIMIT) {

throw new OutOfDirectMemoryError("failed to allocate " + capacity

+ " byte(s) of direct memory (used: " + usedMemory + ", max: " + DIRECT_MEMORY_LIMIT + ')');

}

if (DIRECT_MEMORY_COUNTER.compareAndSet(usedMemory, newUsedMemory)) {

break;

}

}

}

}

看了下自己jvm启动参数XX:MaxDirectMemorySize确实配置的是256M。

分析2

异常栈里显示的PoolArena是netty中的内存池的实现类。为了集中管理内存的分配和释放,提高分配释放内存的性能,netty预先分配一个大块的内存空间来实现一个内存池,使用时会拆分成各个小块的进行分配使用。所以猜测是内存池中对象被分配使用后未进行回收,导致不断新增对象直到到达JVM限制的临界值。

光有这2点信息,不太好排查出问题具体出在哪里。于是我开始用google检索相关信息,最后找到了netty官方关于ByteBuf对象引用计数的讲解:http://netty.io/wiki/reference-counted-objects.html

文章里说明了如果引用计数使用不当,会导致Memory Leak,并给了内存泄露原因的排查方式。然后我试着在log中grep关键词LEAK发现了有一条error(由于最上面的那个异常栈信息刷的太多,导致我开始没注意到该ERROR log)。

2018-06-25 17:39:56.616 ERROR 18059 --- [-] [ntLoopGroup-5-1] io.netty.util.ResourceLeakDetector : LEAK: ByteBuf.release() was not called before it's garbage-collected. Enable advanced leak reporting to find out where the leak occurred. To enable advanced leak reporting, specify the JVM option '-Dio.netty.leakDetection.level=advanced' or call ResourceLeakDetector.setLevel() See http://netty.io/wiki/reference-counted-objects.html for more information.

看提示要在JVM参数中添加-Dio.netty.leakDetection.level=advanced来获取更多的异常信息,通过查询文档得知级别信息,默认SIMPLE会告诉你有leak,但是不会从buffers中进行采样分析,ADVANCED会从buffers中取1%进行采样分析告诉你leak的原因。

There are currently 4 levels of leak detection:

DISABLED - disables leak detection completely. Not recommended.

SIMPLE - tells if there is a leak or not for 1% of buffers. Default.

ADVANCED - tells where the leaked buffer was accessed for 1% of buffers.

PARANOID - Same with ADVANCED except that it's for every single buffer. Useful for automated testing phase. You could fail the build if the build output contains 'LEAK:'.

我在jvm参数里加上-Dio.netty.leakDetection.level=advanced后开始重现bug,为了尽快重现bug,我将jvm直接内存限制调小到26m(-XX:MaxDirectMemorySize=26m)

很快bug重现了,再次得到异常信息如下,这时后详细多了,不过又出现一个提示我们1条leak record被丢弃了,因为限制leak record为4,可以修改io.netty.leakDetection.maxRecords参数来进行配置。

2018-06-25 16:09:34.647 ERROR 16469 --- [-] [ntLoopGroup-5-4] io.netty.util.ResourceLeakDetector : LEAK: ByteBuf.release() was not called before it's garbage-collected. See http://netty.io/wiki/reference-counted-objects.html for more information.

WARNING: 1 leak records were discarded because the leak record count is limited to 4. Use system property io.netty.leakDetection.maxRecords to increase the limit.

Recent access records: 4

#4:

io.netty.buffer.AdvancedLeakAwareByteBuf.readByte(AdvancedLeakAwareByteBuf.java:396)

com.enniu.cloud.services.connection.server.httpproxy.handlers.HttpProxyHeader.readLine(HttpProxyHeader.java:105)

com.enniu.cloud.services.connection.server.httpproxy.handlers.HttpProxyHeader.digest(HttpProxyHeader.java:46)

com.enniu.cloud.services.connection.server.httpproxy.handlers.HttpProxyServerHandler.channelRead(HttpProxyServerHandler.java:42)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.handler.logging.LoggingHandler.channelRead(LoggingHandler.java:241)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1334)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

#3:

Hint: 'HttpProxyServerHandler#0' will handle the message from this point.

io.netty.channel.DefaultChannelPipeline.touch(DefaultChannelPipeline.java:107)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:345)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.handler.logging.LoggingHandler.channelRead(LoggingHandler.java:241)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1334)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

#2:

Hint: 'LoggingHandler#0' will handle the message from this point.

io.netty.channel.DefaultChannelPipeline.touch(DefaultChannelPipeline.java:107)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:345)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1334)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

#1:

Hint: 'DefaultChannelPipeline$HeadContext#0' will handle the message from this point.

io.netty.channel.DefaultChannelPipeline.touch(DefaultChannelPipeline.java:107)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:345)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

Created at:

io.netty.util.ResourceLeakDetector.track(ResourceLeakDetector.java:237)

io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:331)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:181)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:172)

io.netty.buffer.AbstractByteBufAllocator.ioBuffer(AbstractByteBufAllocator.java:133)

io.netty.channel.DefaultMaxMessagesRecvByteBufAllocator$MaxMessageHandle.allocate(DefaultMaxMessagesRecvByteBufAllocator.java:80)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:122)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

于是我又改了下jvm参数,加上 -Dio.netty.leakDetectio.maxRecords=10

最终得到完整的异常信息:

2018-06-25 17:52:46.806 ERROR 18384 --- [-] [ntLoopGroup-5-3] io.netty.util.ResourceLeakDetector : LEAK: ByteBuf.release() was not called before it's garbage-collected. See http://netty.io/wiki/reference-counted-objects.html for more information.

Recent access records: 5

#5:

io.netty.buffer.AdvancedLeakAwareByteBuf.readByte(AdvancedLeakAwareByteBuf.java:396)

com.enniu.cloud.services.connection.server.httpproxy.handlers.HttpProxyHeader.readLine(HttpProxyHeader.java:105)

com.enniu.cloud.services.connection.server.httpproxy.handlers.HttpProxyHeader.digest(HttpProxyHeader.java:46)

com.enniu.cloud.services.connection.server.httpproxy.handlers.HttpProxyServerHandler.channelRead(HttpProxyServerHandler.java:42)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.handler.logging.LoggingHandler.channelRead(LoggingHandler.java:241)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1334)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

#4:

Hint: 'HttpProxyServerHandler#0' will handle the message from this point.

io.netty.channel.DefaultChannelPipeline.touch(DefaultChannelPipeline.java:107)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:345)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.handler.logging.LoggingHandler.channelRead(LoggingHandler.java:241)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1334)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

#3:

Hint: 'LoggingHandler#0' will handle the message from this point.

io.netty.channel.DefaultChannelPipeline.touch(DefaultChannelPipeline.java:107)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:345)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1334)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

#2:

Hint: 'DefaultChannelPipeline$HeadContext#0' will handle the message from this point.

io.netty.channel.DefaultChannelPipeline.touch(DefaultChannelPipeline.java:107)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:345)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

#1:

io.netty.buffer.AdvancedLeakAwareByteBuf.writeBytes(AdvancedLeakAwareByteBuf.java:630)

io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:372)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:123)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

Created at:

io.netty.util.ResourceLeakDetector.track(ResourceLeakDetector.java:237)

io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:331)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:181)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:172)

io.netty.buffer.AbstractByteBufAllocator.ioBuffer(AbstractByteBufAllocator.java:133)

io.netty.channel.DefaultMaxMessagesRecvByteBufAllocator$MaxMessageHandle.allocate(DefaultMaxMessagesRecvByteBufAllocator.java:80)

io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:122)

io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644)

io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:579)

io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:496)

io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458)

io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

java.lang.Thread.run(Thread.java:745)

上诉异常信息提示泄漏的ByteBuf从 LoggingHandler→HttpProxyServerHandler, 最终在HttpProxyServerHandler中出现问题。观察HttpProxyServerHandler代码,发现确实没有release读进来的ByteBuf对象。

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) {

ByteBuf original = (ByteBuf) msg;

if (isFirstRead) {

HttpProxyHeader httpProxyClientHeader = new HttpProxyHeader();

try {

httpProxyClientHeader.digest(original);

} catch (Exception e) {

log.error("http proxy request header is illegal, {}", e.getMessage());

ctx.close().addListener(CLOSE_ON_FAILURE);

return;

} //##############这里没有release original###########

....

....

}

修复方案,在finally语句块里添加ReferenceCountUtil.release(original);

try {

httpProxyClientHeader.digest(original);

} catch (Exception e) {

log.error("http proxy request header is illegal, {}", e.getMessage());

ctx.close().addListener(CLOSE_ON_FAILURE);

return;

} finally {

ReferenceCountUtil.release(original); //加个引用计数release

}

然后再重新进行压测,未出现OOM问题,bug得以解决。

那么问题来了,为啥引用计数不-1会导致OOM呢?

我DEBUG看了下channelRead方法读进来的Object msg类型是PooledUnsafeDirectByteBuf,看了下释放内存的方法为其父类PooledByteBuf中的deallocate()方法。

@Override

protected final void deallocate() {

if (handle >= 0) {

final long handle = this.handle;

this.handle = -1;

memory = null;

tmpNioBuf = null;

chunk.arena.free(chunk, handle, maxLength, cache);

chunk = null;

recycle();

}

}

那么deallocate()方法在跟release()方法又有什么关系呢?

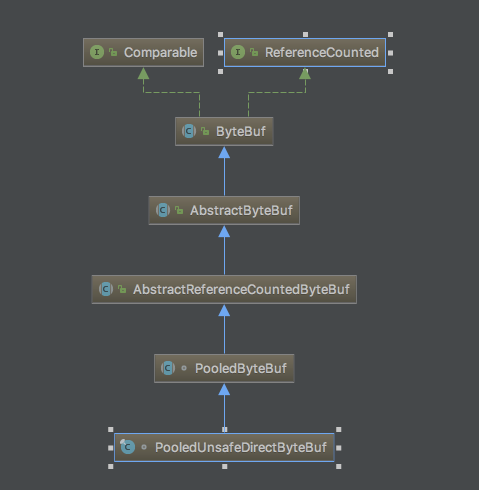

看下PooledUnsafeDirectByteBuf继承类的结构:

调用的release()方法在 父类AbstractReferenceCountedByteBuf中,可以看到确实在release时调用了deallocate,至此应该弄清楚原因了,分析2中的猜测【内存池中对象被分配使用后未进行回收,导致不断新增对象直到到达JVM限制的临界值】是正确的。

@Override

public boolean release() {

return release0(1);

}

@Override

public boolean release(int decrement) {

return release0(checkPositive(decrement, "decrement"));

}

private boolean release0(int decrement) {

for (;;) {

int refCnt = this.refCnt;

if (refCnt < decrement) {

throw new IllegalReferenceCountException(refCnt, -decrement);

}

if (refCntUpdater.compareAndSet(this, refCnt, refCnt - decrement)) {

if (refCnt == decrement) {

deallocate();

return true;

}

return false;

}

}

}

参考: